Welcome to Cautious Optimism, a newsletter on tech, business, and power.

Happy Monday! Our economic calendar came out this morning. It’s going to be a big week. Tesla earnings are on Tuesday. Alphabet and Intel report later in the week. We’re about to learn a lot about AI spend, in other words. All eyes will be on Tesla’s ability to grow despite brand issues and rising competition, and on how Alphabet will frame its capex vis-à-vis its AI revenues. Intel just has to pitch a competent plan to rebuild, so expect its earnings call to be closely scrutinized. The IPO calendar is bare and deals are thin, but there’s still a lot going on. To work! - Alex

📈 Trending Up: Tiny shocks … Tapcheck … Revel … Bengaluru real estate … Crux … chip wars in China … oops … holding fast … free trade in India? …

📉 Trending Down: Our linguistic differences … clean governance … US-China relations … the DoD … Academic freedom … no, really … Boeing sales in China …

The US stock market, in pre-market trading.

Quote of the Day: “But now that a new paper shows that AI passes the Turing Test, we need to admit that we really don’t know what that actually means.”

I thought we wanted this?

Over the weekend a startup announced itself to the world, and didn’t quite get the reaction that it anticipated. In terms of reach, Mechanize likely crushed its goals. Its tweets did numbers, and TechCrunch picked up its launch.

But discussion didn’t center on just how Mechanize might fulfill its goal of “developing virtual work environments, benchmarks, and training data that will enable the full automation of the economy.” No, instead, some folks were pretty irked that a company wants to replace all human labor in the economy.

Detractors called the company “traitors to humanity,” while other posters called its work anti-worker and a generally bad idea. The best response read as follows: “Unsure if this will be net positive. How does one invest?”

The only sin that Mechanize committed was saying out loud what others in technology are thinking. Who, watching the pace at which AI is advancing and how far it has already worked its way into the economy, mechanizing both white-collar work (programming, writing, etc.) and blue-collar work (robotics, etc.), really thinks that this won’t displace humans?

So, we’re arguing about degrees and timing. I have no precise view on Mechanize per se, but I thought people wanted more automation? The market does, at least.

The standard argument at this juncture goes as follows:

Complaint: Automation is going to ruin human livelihoods!

Rejoinder: Despite many technological revolutions, we’re still near full employment in the United States; ergo, the next technological revolution will not obviate the need for human labor.

Complaint: AI, in both physical and intellectual work, is designed to replace humans, not augment them, making it a different sort of sea change than we’ve seen before. The industrial revolution still needed lots of humans, after all.

No one knows what is coming next. Will this time be different from prior revolutions in work? At the risk of tempting history to laugh, I think it will be. The current focus in corporate America is to reduce human inputs to a step or two below minimum viable staffing levels, and plow the rest of the capital into trying to automate even those gigs.

Anti-human? Hard to say that since we humans are the ones building AI technology. The simplest stance to take is this: Economic incentives are driving AI innovation and adoption; those forces are not slowing; so, how can we build a better world for humans next to a more automated economy? Mechanize is just part of the wave; it didn’t shake the Earth.

There’s still a split on how tech is used in technology-land

Y Combinator founder Paul Graham’s views on the Israel-Hamas war in Gaza differ from those of many of his colleagues in the technology investing landscape. Few other voices of his stature in startups have been as publicly critical of Israel’s wartime tactics, for example.

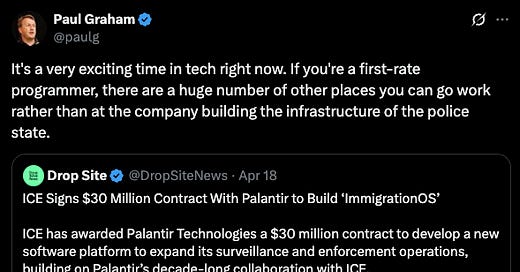

Graham has largely managed to avoid blowback, apart from dismissive tweets as far as I can tell, but his views are contra-normal in more ways than simply geopolitical. To wit, Graham penned the following heading into the weekend:

Palantir struck back with an essay on X that included various riffs on famous PG chestnuts (build something people want, and so forth). The argument covered ground we’ve seen trod before: When does technology go from morally neutral to morally negative, and when should we put human privacy ahead of government visibility?

As Palantir points out, Google ran into the issue back in 2018, but it has since moved away from caution when it comes to defense contracts. Microsoft recently got hit with a complaint from some of its workers about the use of its technology by the Israeli military; the protestors were fired.

I am of mixed views here. I am in favor of startups building weapons systems, for example. My brother is active duty, so I am biased in favor of the use of tech to keep our soldiers safe. That said, especially in the current political context of thought-crime and virulent Federal recrimination for even irking POTUS, I am incredibly skeptical of mass-surveillance systems in particular.

Which is what Palantir is seemingly working on. From the company’s own X missive (emphasis added) regarding the work in question:

We do collectively believe that competence in our government is a prerequisite for our way of life and assume the burden of falling forward on the goal to provide it every single day. We are in a situation today where no matter the immigration policy the citizenry votes for, it cannot be executed; reality has largely been divorced from the statutes for decades now. This is first a question of competence, not politics. If the electorate cannot steer the execution of our government because the government cannot execute, our institutions lose all credibility, and the risk moves from an X debate to a much more fundamental one. We work to attach the steering wheel to the car and revitalize the institutions our societal fabric depends upon. We are looking for people who read something like this post and think it is crazy to demonize working towards a more effective government.

People need to stop talking like born-again Manichaeans — if we don’t have utter border control, we don’t have a nation, etc — the rhetorical method isn’t useful. I don’t buy the “our institutions lose all credibility” argument. For example, lots of folks don’t stop entirely at stop signs. Our traffic laws, amazingly, have not lost all their credibility despite some scofflaw activity.

I think there’s a huge amount of space for folks like Graham who are unabashedly pro-technology and anti-blanket surveillance. In fact, I think we need to ensure that there is space for such voices. We don’t want the tech sector to become little more than government water carriers; if we do, we’ll lose encryption, privacy, and more.

That so many of the anti-Biden folks in tech who complained about too active a government are also in the yeehaw Palantir camp should not surprise anyone. No. Those folks want to be left alone themselves, while others are held to the law, strictly. It’s a bit like a CEO demanding RTO and then not showing up. Rules for thee, personal freedoms for me.

So I think it’s perfectly reasonable to be in favor of startups building great tools for our warfighters like cheaper drones, ways to avoid GPS jamming, and even space defense technology. I just don’t think we need to throw up our hands and give our government easier ways to ape the panopticon, and call anyone opposed effectively unpatriotic and in favor of drug overdoses.